How I Built a Budget Server That Packs a Punch Part 2

Moving Digital Furniture Without Breaking Anything

Ah yes, the fun part of building a new server—migration. If hardware assembly feels like building IKEA furniture, service migration is more like moving a fully decorated house, room by room, hoping nothing fragile gets dropped along the way. My older HP Z600 had accumulated quite the collection of services—Docker containers, web servers, Apache configs, and some stray gremlins I’m sure were hiding in the corner.

The first step was mapping everything out. Which services needed to be isolated for security? Which ones needed to stay online even when I was tinkering with something else? And most importantly, which services were unnecessarily running in host mode and could now finally be wrangled into neat little Docker containers? Plex, CrowdSec, and Glances were all freeloading in host mode on the old server, and that wasn’t going to fly anymore.

So, let’s break down how I approached this migration and what the new server is hosting.

Docker Server: My Personal Service Playground

This is where most of my personal Docker services live, alongside all my dev/staging WordPress instances. It’s essentially the sandbox for all my experiments, side projects, and apps I absolutely needed to self-host because… well, reasons.

Some notable residents of this server include:

- 40 WordPress installations (because one blog is never enough).

- SFTPGO, which serves as my backup server for all WordPress sites via UpdraftPlus.

- AriaNG for managing downloads.

- FreshRSS & Feedropolis to pretend I’m still organized about my readings.

- Actual Budget & Firefly III for financial management (or at least, financial awareness).

- Glances for system monitoring.

- Nextcloud for file storage.

- Immich for photo and video management.

- Plex for transcoding Linux ISOs (wink).

- And, of course, Portainer to keep this digital circus in order.

Basically, if it can run in a container and I can tinker with it, it lives here.

Docker Server for Clients: No Downtime Allowed

This is the “serious” Docker server. It hosts client projects, production WordPress sites, and APIs that absolutely cannot go down every time I decide to reboot my main Docker playground. This server also runs Portainer Agent, allowing me to monitor and manage it remotely as part of a node cluster.

It’s like the quiet, responsible sibling of my chaotic personal Docker server.

Web Server: Apache Makes a Comeback

Originally, I thought about switching over to Traefik during the migration. But after some testing, I realized Traefik is essentially just a traffic cop: it routes domains to the right ports, but it doesn’t serve files or run PHP itself, and my virtual-host setup depends on both.

So, I stuck with good ol’ Apache2. This time, though, I made sure to properly set up Mod-Security2 for added protection. I also installed Docker to run MySQL and PostgreSQL databases, which are much easier to manage in containers.

Migration was surprisingly smooth. A simple rsync to move the files, a mysqldump from the HP Z600, and importing those SQL files into the Docker database containers on the new server did the trick. Nothing exploded, and Apache fired up without throwing tantrums.

LXCs: The Lightweight Champions

I’m currently running three LXC containers, each serving a very specific purpose:

- Cloudflared for secure tunneling.

- AdGuard Home for network-wide ad blocking.

- Uptime Kuma (running two instances) for service monitoring.

These were relatively easy to set up. For Cloudflared and AdGuard Home, I used community scripts available for ProxmoxVE. As for Uptime Kuma, I opted for a rootless Docker setup to keep things clean and isolated.

The three containers fit perfectly into the free three-node limitation of the Proxmox Business Plan. It’s almost like I planned it.

Docker Migration: The Good, The Bad, and The Database

Migrating most Docker services was blissfully simple—it was mostly a matter of copying volumes over using rsync. Here’s the command I used:

sudo rsync -avogh --info=progress2 folder/file user@new-server-ip:path

Breakdown of the options

-a (archive mode) copies directories recursively and preserves symlinks, permissions, timestamps, owner, and group.

-v produces verbose output, listing each file as it is transferred.

-o preserves the owner of the files. Archive mode already implies this, but I spell it out because I’m using sudo and some Docker volumes are mapped as root:root.

-g preserves the group, for the same reason as above.

-h prints numbers in a human-readable format.

--info=progress2 shows overall progress for the whole transfer instead of per-file progress.
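To double-check that a copied volume really matches the original, a checksum comparison is a cheap sanity test. This is only a minimal sketch, assuming GNU coreutils and findutils on both machines; dir_fingerprint is a hypothetical helper name, not an rsync feature.

```shell
#!/usr/bin/env bash
# dir_fingerprint DIR
# Prints a single SHA-256 digest that summarises every regular file under
# DIR (relative path plus content). Hypothetical helper for illustration.
dir_fingerprint() {
    local dir="$1"
    (
        cd "$dir" || exit 1
        # Hash each file in a stable (sorted) order, then hash the manifest.
        find . -type f -print0 | sort -z | xargs -0 -r sha256sum | sha256sum | cut -d' ' -f1
    )
}

# Example (placeholder path): run on both servers and compare the output.
#   dir_fingerprint /path/to/docker/volume
```

Matching digests mean file paths and contents agree. The sketch does not compare ownership or permissions, so it complements the rsync flags rather than replacing them.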

The Problematic Transfers

But not everything went smoothly. Databases threw a wrench into my plans. Instead of just copying MySQL or PostgreSQL data folders, I realized it was far safer to export the databases using mysqldump (pg_dump is the PostgreSQL equivalent), set up fresh containers, and then import the SQL files back in. This ensured data integrity and saved me from a potential cascade of permission-related headaches.
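The export and import steps can be sketched roughly as follows. Treat this as an illustration under assumptions rather than my exact commands: the container names, database names, and the root/postgres users are placeholders, and the target database is assumed to exist already in the new container.

```shell
#!/usr/bin/env bash
# All container names, database names and users here are placeholders.

# Export one MySQL database on the old host (prompts for the password).
dump_mysql() {          # dump_mysql DB_NAME OUT_FILE
    mysqldump --single-transaction -u root -p "$1" > "$2"
}

# Import a dump into a MySQL container. The password comes from the
# container's own MYSQL_ROOT_PASSWORD variable; "$0" is the database name.
import_mysql() {        # import_mysql CONTAINER DB_NAME DUMP_FILE
    docker exec -i "$1" sh -c 'exec mysql -uroot -p"$MYSQL_ROOT_PASSWORD" "$0"' "$2" < "$3"
}

# PostgreSQL equivalents use pg_dump and psql.
dump_postgres() {       # dump_postgres DB_NAME OUT_FILE
    pg_dump -U postgres "$1" > "$2"
}

import_postgres() {     # import_postgres CONTAINER DB_NAME DUMP_FILE
    docker exec -i "$1" psql -U postgres -d "$2" < "$3"
}

# Example with placeholder names:
#   dump_mysql wordpress wordpress.sql
#   import_mysql mysql-new wordpress wordpress.sql
```

Going through SQL dumps instead of raw data folders also sidesteps version and file-ownership mismatches between the old host install and the new containers.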

1. Nextcloud: A Comedy of Disk Mapping Errors

In theory, migrating Nextcloud should’ve been a simple shift-and-lift operation. In practice? Not so much.

Disks are attached to a VM in Proxmox with the following command:

qm set <vm-id> -virtio<id> /dev/sd<disk>

The next step is mounting each disk in the right place inside the VM. My Nextcloud disk mapping is fairly convoluted:

vda 9.1T 0 disk nextcloud/data

vdb 9.1T 0 disk nextcloud/data/maya/files/linux-iso-1

vdc 9.1T 0 disk nextcloud/data/maya/files/linux-iso-2

vdd 9.1T 0 disk nextcloud/data/maya/files/linux-iso-3

I managed to mess up the mapping. Instead of pointing the first disk to nextcloud/data, I accidentally pointed it to nextcloud/. Cue hours of debugging, hair-pulling, and the quiet sobbing of lost ISOs.

Lesson learned: triple-check disk mappings before committing.
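A check along these lines would have saved me those hours. It is only a sketch: check_mounts is a hypothetical helper, and the expected-mounts file (one "device mountpoint" pair per line, with absolute paths) is an assumption, since the listing above only shows paths relative to my Nextcloud folder.

```shell
#!/usr/bin/env bash
# check_mounts EXPECTED_FILE
# EXPECTED_FILE holds "device mountpoint" pairs, one per line.
# The actual pairs are read from stdin, for example from:
#   lsblk -rno NAME,MOUNTPOINT
# Prints one line per mismatch and returns non-zero if any were found.
check_mounts() {
    local expected_file="$1" actual status=0 dev want got
    actual="$(cat)"
    while read -r dev want; do
        [ -z "$dev" ] && continue
        got="$(printf '%s\n' "$actual" | awk -v d="$dev" '$1 == d { print $2 }')"
        if [ "$got" != "$want" ]; then
            echo "MISMATCH: $dev is mounted at '${got:-<nowhere>}', expected '$want'"
            status=1
        fi
    done < "$expected_file"
    return "$status"
}

# Example (expected-mounts.txt is a placeholder file name):
#   lsblk -rno NAME,MOUNTPOINT | check_mounts expected-mounts.txt
```

Running it before starting the Nextcloud container turns "triple-check" into something a script can do for me.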

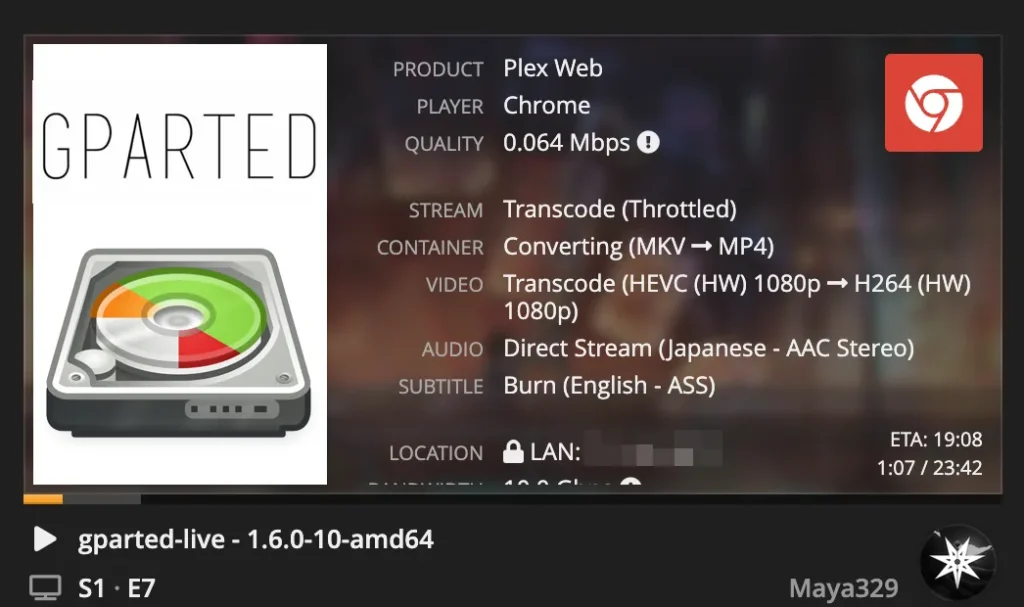

2. Plex: Claim Key Drama and GPU Passthrough Shenanigans

The Migration

Previously, Plex was running as a host service on my old HP Z600, casually freeloading on the system like a roommate who eats your food and never cleans up. Moving it into a Docker container felt like the right move—more isolation, easier updates, and fewer spaghetti config files lying around.

But Plex, in all its wisdom, requires a Claim Key when you move servers. No key? No Plex. And Plex doesn’t make this process easy if your server isn’t running locally. After scrolling through forums, swearing at error messages, and questioning my life choices, I stumbled upon uglymagoo’s plex-claim-server GitHub project. Shoutout to that hero—seriously. Without it, I’d probably still be staring at a blinking login screen, wondering if Plex had become self-aware and was actively gaslighting me.

Instructions for claiming in case GitHub was taken offline

The Plex Media Server setup requires you to link your new server to your plex.tv account. This is done by initially accessing your server from “the same network” [1]. This can be a little “complicated” on a remote headless server if you have never heard of an “ssh tunnel” before.

The script in this git repo just uses the same mechanics that are used by the official Plex Docker image [2]. In fact, the script is just a refactored version of the Docker script.

- Make the script executable:

chmod +x plex-claim-server.sh

- Get your Plex Claim Code (it is only valid for a few minutes): https://www.plex.tv/claim/

- Claim your server:

sudo -u plex ./plex-claim-server.sh "$YOUR_CLAIM_CODE"

- Restart your Plex Media Server, e.g. with:

sudo systemctl restart plexmediaserver

Set the environment variable PLEX_MEDIA_SERVER_APPLICATION_SUPPORT_DIR to tell the script about your non-default Plex setup.

[1] https://support.plex.tv/articles/200288586-installation/#toc-2

Copy of plex-claim-server.sh

#!/bin/bash

# If we are debugging, enable trace
if [ "${DEBUG,,}" = "true" ]; then
    set -x
fi

function getPref {
    local key="$1"

    sed -n -E "s/^.*${key}=\"([^\"]*)\".*$/\1/p" "${prefFile}"
}

function setPref {
    local key="$1"
    local value="$2"

    count="$(grep -c "${key}" "${prefFile}")"
    count=$(($count + 0))
    if [[ $count > 0 ]]; then
        sed -i -E "s/${key}=\"([^\"]*)\"/${key}=\"$value\"/" "${prefFile}"
    else
        sed -i -E "s/\/>/ ${key}=\"$value\"\/>/" "${prefFile}"
    fi
}

home="$(echo ~plex)"
pmsApplicationSupportDir="${PLEX_MEDIA_SERVER_APPLICATION_SUPPORT_DIR:-${home}/Library/Application Support}"
prefFile="${pmsApplicationSupportDir}/Plex Media Server/Preferences.xml"

PLEX_CLAIM="$1"

# Create empty shell pref file if it doesn't exist already
if [ ! -e "${prefFile}" ]; then
    echo "Creating pref shell"
    mkdir -p "$(dirname "${prefFile}")"
    cat > "${prefFile}" <<-EOF
<?xml version="1.0" encoding="utf-8"?>
<Preferences/>
EOF
    chown -R plex:plex "$(dirname "${prefFile}")"
fi

# Setup Server's client identifier
serial="$(getPref "MachineIdentifier")"
if [ -z "${serial}" ]; then
    serial="$(uuidgen)"
    setPref "MachineIdentifier" "${serial}"
fi

clientId="$(getPref "ProcessedMachineIdentifier")"
if [ -z "${clientId}" ]; then
    clientId="$(echo -n "${serial}- Plex Media Server" | sha1sum | cut -b 1-40)"
    setPref "ProcessedMachineIdentifier" "${clientId}"
fi

# Get server token and only turn claim token into server token if we have former but not latter.
token="$(getPref "PlexOnlineToken")"
if [ ! -z "${PLEX_CLAIM}" ] && [ -z "${token}" ]; then
    echo "Attempting to obtain server token from claim token"
    loginInfo="$(curl -X POST \
        -H 'X-Plex-Client-Identifier: '${clientId} \
        -H 'X-Plex-Product: Plex Media Server' \
        -H 'X-Plex-Version: 1.1' \
        -H 'X-Plex-Provides: server' \
        -H 'X-Plex-Platform: Linux' \
        -H 'X-Plex-Platform-Version: 1.0' \
        -H 'X-Plex-Device-Name: PlexMediaServer' \
        -H 'X-Plex-Device: Linux' \
        "https://plex.tv/api/claim/exchange?token=${PLEX_CLAIM}")"
    token="$(echo "$loginInfo" | sed -n 's/.*<authentication-token>\(.*\)<\/authentication-token>.*/\1/p')"

    if [ "$token" ]; then
        setPref "PlexOnlineToken" "${token}"
        echo "Plex Media Server successfully claimed"
    fi
fi

GPU Passthrough for Encoding

Now came the second boss fight: GPU passthrough. Because what’s Plex without smooth transcoding? Basically just a glorified file browser.

The first step was enabling IOMMU in Proxmox, configuring GPU passthrough, and performing the sacred ritual of rebooting five times while holding my breath. I followed a simple guide written by /u/cjalas on Reddit, which was clear, concise, and didn’t assume I was an absolute potato (thanks, buddy).

To make sure the GPU passthrough was actually working, I passed it through to a Windows VM first. Because if it works on Windows, it’ll usually work everywhere else. After confirming that the GPU was behaving itself, I unceremoniously detached it from Windows and attached it to my Docker server VM.

Next up was getting the GPU to play nice with Ubuntu 24.04. Time to dive into driver installation.

Backup of Reddit post

Ultimate Beginner’s Guide to Proxmox GPU Passthrough

Welcome all, to the first installment of my Idiot Friendly tutorial series! I’ll be guiding you through the process of configuring GPU Passthrough for your Proxmox Virtual Machine Guests. This guide is aimed at beginners to virtualization, particularly for Proxmox users. It is intended as an overall guide for passing through a GPU (or multiple GPUs) to your Virtual Machine(s). It is not intended as an all-exhaustive how-to guide; however, I will do my best to provide you with all the necessary resources and sources for the passthrough process, from start to finish. If something doesn’t work properly, please check /r/Proxmox, /r/Homelab, /r/VFIO, or /r/linux4noobs for further assistance from the community.

Before We Begin (Credits)

This guide wouldn’t be possible without the fantastic online Proxmox community; both here on Reddit, on the official forums, as well as other individual user guides (which helped me along the way, in order to help you!). If I’ve missed a credit source, please let me know! Your work is appreciated.

Disclaimer: In no way, shape, or form does this guide claim to work for all instances of Proxmox/GPU configurations. Use at your own risk. I am not responsible if you blow up your server, your home, or yourself. Surgeon General Warning: do not operate this guide while under the influence of intoxicating substances. Do not let your cat operate this guide. You have been warned.

Let’s Get Started (Pre-configuration Checklist)

It’s important to make note of all your hardware/software setup before we begin the GPU passthrough. For reference, I will list what I am using for hardware and software. This guide may or may not work the same on any given hardware/software configuration, and it is intended to help give you an overall understanding and basic setup of GPU passthrough for Proxmox only.

Your hardware should, at the very least, support: VT-d, interrupt remapping, and UEFI BIOS.

My Hardware Configuration:

Motherboard: Supermicro X9SCM-F (Rev 1.1 Board + Latest BIOS)

CPU: LGA1155 Socket, Xeon E3-1220 (version 2) [1]

Memory: 16GB DDR3 (ECC, Unregistered)

GPU: 2x GTX 1050 Ti 4GB, 2x GTX 1060 6GB [2]

My Software Configuration:

Latest Proxmox Build (5.3 as of this writing)

Windows 10 LTSC Enterprise (Virtual Machine) [3]

Notes:

[1] On most Xeon E3 CPUs, IOMMU grouping is a mess, so some extra configuration is needed. More on this later.

[2] It is not recommended to use multiple GPUs of the same exact brand/model type. More on this later.

[3] Any Windows 10 installation ISO should work, however, try to stick to the latest available ISO from Microsoft.

Configuring Proxmox

This guide assumes you already have at the very least, installed Proxmox on your server and are able to login to the WebGUI and have access to the server node’s Shell terminal. If you need help with installing base Proxmox, I highly recommend the official “Getting Started” guide and their official YouTube guides.

Step 1: Configuring the Grub

Either SSH directly into your Proxmox server or use the noVNC Shell terminal under “Node”, then open up the /etc/default/grub file. I prefer to use nano, but you can use whatever text editor you prefer.

nano /etc/default/grub

Look for this line:

GRUB_CMDLINE_LINUX_DEFAULT="quiet"

Then change it to look like this:

For Intel CPUs:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on"

For AMD CPUs:

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on"

IMPORTANT ADDITIONAL COMMANDS

You might need to add additional commands to this line, if the passthrough ends up failing. For example, if you’re using a similar CPU as I am (Xeon E3-12xx series), which has horrible IOMMU grouping capabilities, and/or you are trying to passthrough a single GPU.

These additional commands essentially tell Proxmox not to utilize the GPUs present for itself, as well as helping to split each PCI device into its own IOMMU group. This is important because, if you try to use a GPU in say, IOMMU group 1, and group 1 also has your CPU grouped together for example, then your GPU passthrough will fail.
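(Not part of the original Reddit post.) To see how the kernel has actually grouped devices, the sysfs tree can be walked with a few lines of shell. This is a minimal sketch; list_iommu_groups is a hypothetical helper, and the optional directory argument exists only so it can be tried against a test directory.

```shell
#!/usr/bin/env bash
# list_iommu_groups [SYSFS_ROOT]
# Prints "group <n>: <pci-address>" for every device the kernel has placed
# in an IOMMU group. SYSFS_ROOT defaults to the real sysfs location.
list_iommu_groups() {
    local root="${1:-/sys/kernel/iommu_groups}" dev group
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue          # nothing found: IOMMU is probably off
        group="${dev%/devices/*}"          # strip "/devices/<addr>"
        group="${group##*/}"               # keep only the group number
        echo "group ${group}: ${dev##*/}"
    done | sort -V
}

# Example: run on the Proxmox host after rebooting with the new GRUB line.
#   list_iommu_groups
```

If the GPU's functions (for example 01:00.0 and 01:00.1) share a group with unrelated devices, that is usually the sign that the ACS override described here is needed.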

Here are my grub command line settings:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_acs_override=downstream,multifunction nofb nomodeset video=vesafb:off,efifb:off"

For more information on what these commands do and how they help:

A. Disabling the Framebuffer: video=vesafb:off,efifb:off

B. ACS Override for IOMMU groups: pcie_acs_override=downstream,multifunction

When you have finished editing /etc/default/grub, run this command:

update-grub

Step 2: VFIO Modules

You’ll need to add a few VFIO modules to your Proxmox system. Again, using nano (or whatever), edit the file /etc/modules:

nano /etc/modules

Add the following (copy/paste) to the /etc/modules file:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

Then save and exit.

Step 3: IOMMU interrupt remapping

I’m not going to get too much into this; all you really need to do is run the following commands in your Shell:

echo "options vfio_iommu_type1 allow_unsafe_interrupts=1" > /etc/modprobe.d/iommu_unsafe_interrupts.conf

echo "options kvm ignore_msrs=1" > /etc/modprobe.d/kvm.conf

Step 4: Blacklisting Drivers

We don’t want the Proxmox host system utilizing our GPU(s), so we need to blacklist the drivers. Run these commands in your Shell:

echo "blacklist radeon" >> /etc/modprobe.d/blacklist.conf

echo "blacklist nouveau" >> /etc/modprobe.d/blacklist.conf

echo "blacklist nvidia" >> /etc/modprobe.d/blacklist.conf

Step 5: Adding GPU to VFIO

Run this command:

lspci -v

Your shell window should output a bunch of stuff. Look for the line(s) that show your video card. It’ll look something like this:

01:00.0 VGA compatible controller: NVIDIA Corporation GP104 [GeForce GTX 1070] (rev a1) (prog-if 00 [VGA controller])

01:00.1 Audio device: NVIDIA Corporation GP104 High Definition Audio Controller (rev a1)

Make note of the first set of numbers (e.g. 01:00.0 and 01:00.1). We’ll need them for the next step.

Run the command below. Replace 01:00 with whatever number was next to your GPU when you ran the previous command:

lspci -n -s 01:00

Doing this should output your GPU card’s Vendor IDs, usually one ID for the GPU and one ID for the Audio bus. It’ll look a little something like this:

01:00.0 0000: 10de:1b81 (rev a1)

01:00.1 0000: 10de:10f0 (rev a1)

What we want to keep, are these vendor id codes: 10de:1b81 and 10de:10f0.
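(Not part of the original Reddit post.) Copying the IDs by hand is easy to get wrong, so the list can be built from the lspci output directly. A small sketch, assuming the three-column "slot class: vendor:device" layout shown above; vfio_ids is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# vfio_ids
# Reads `lspci -n -s <slot>` output on stdin and prints the vendor:device
# pairs (third column) as a comma-separated list.
vfio_ids() {
    awk '{ print $3 }' | paste -sd, -
}

# Example: replace 01:00 with your own slot.
#   lspci -n -s 01:00 | vfio_ids
```

For the sample output above this yields 10de:1b81,10de:10f0, which is the value that goes after ids= in the vfio-pci options line.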

Now we add the GPU’s vendor id’s to the VFIO (remember to replace the id’s with your own!):

echo "options vfio-pci ids=10de:1b81,10de:10f0 disable_vga=1" > /etc/modprobe.d/vfio.conf

Finally, we run this command:

update-initramfs -u

And restart:

reboot

Now your Proxmox host should be ready to passthrough GPUs!
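(Not part of the original Reddit post.) After the restart, it is worth confirming that the host really handed the card to vfio-pci before touching any VM. A minimal sketch, assuming the usual "Kernel driver in use:" line printed by lspci -k; uses_vfio is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# uses_vfio
# Reads `lspci -nnk -s <slot>` output on stdin. Succeeds only if at least
# one "Kernel driver in use" line exists and all of them name vfio-pci.
uses_vfio() {
    awk -F': ' '/Kernel driver in use/ { n++; if ($2 != "vfio-pci") bad++ }
                END { exit !(n > 0 && bad == 0) }'
}

# Example: replace 01:00 with your own slot.
#   lspci -nnk -s 01:00 | uses_vfio && echo "GPU is bound to vfio-pci"
```

If the check fails because nouveau or nvidia still owns the card, the blacklist and vfio.conf steps are the first places to look.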

Configuring the VM (Windows 10)

Now comes the ‘fun’ part. It took me many, many different configuration attempts to get things just right. Hopefully my pain will be your gain, and help you get things done right, the first time around.

Step 1: Create a VM

Making a Virtual Machine is pretty easy and self-explanatory, but if you are having issues, I suggest looking up the official Proxmox Wiki and How-To guides.

For this guide, you’ll need a Windows ISO for your Virtual Machine. Here’s a handy guide on how to download an ISO file directly into Proxmox. You’ll want to copy ALL your .ISO files to the proper repository folder under Proxmox (including the VirtIO driver ISO file mentioned below).

Example Menu Screens

General => OS => Hard disk => CPU => Memory => Network => Confirm

IMPORTANT: DO NOT START YOUR VM (yet)

Step 1a (Optional, but RECOMMENDED): Download VirtIO drivers

If you follow this guide and are using VirtIO, then you’ll need this ISO file of the VirtIO drivers to mount as a CD-ROM in order to install Windows 10 using VirtIO (SCSI).

For the CD-Rom, it’s fine if you use IDE or SATA. Make sure CD-ROM is selected as the primary boot device under the Options tab, when you’re done creating the VM. Also, you’ll want to make sure you select VirtIO (SCSI, not VirtIO Block) for your Hard disk and Network Adapter.

Step 2: Enable OVMF (UEFI) for the VM

Under your VM’s Options Tab/Window, set the following up like so:

Boot Order: CD-ROM, Disk (scsi0)

SCSI Controller: VirtIO SCSI Single

BIOS: OVMF (UEFI)

Don’t Forget: When you change the BIOS from SeaBIOS (Default) to OVMF (UEFI), Proxmox will say something about adding an EFI disk. So you’ll go to your Hardware Tab/Window and do that. Add > EFI Disk.

Step 3: Edit the VM Config File

Going back to the Shell window, we need to edit /etc/pve/qemu-server/<vmid>.conf, where <vmid> is the VM ID Number you used during the VM creation (General Tab).

nano /etc/pve/qemu-server/<vmid>.conf

In the editor, let’s add these lines (it doesn’t matter where you add them, so long as they are on new lines; Proxmox will move things around for you after you save):

machine: q35

cpu: host,hidden=1,flags=+pcid

args: -cpu 'host,+kvm_pv_unhalt,+kvm_pv_eoi,hv_vendor_id=NV43FIX,kvm=off'

Save and exit the editor.

Step 4: Add PCI Devices (Your GPU) to VM

Under the VM’s Hardware Tab/Window, click on the Add button towards the top. Then under the drop-down menu, click PCI Device.

Look for your GPU in the list, and select it. On the PCI options screen, you should only need to configure it like so:

All Functions: YES

Rom-Bar: YES

Primary GPU: NO

PCI-Express: YES (requires 'machine: q35' in vm config file)

Here’s an example image of what your Hardware Tab/Window should look like when you’re done creating the VM.

Oopsies, make sure “All Functions” is CHECKED.

Step 4a (Optional): ROM File Issues

In the off chance that things don’t work properly at the end, you MIGHT need to come back to this step and specify the ROM file for your GPU. This is a process unto itself, and requires some extra steps, as outlined below.

Step 4a1:

Download your GPU’s ROM file

OR

Dump your GPU’s ROM File:

cd /sys/bus/pci/devices/0000:01:00.0/

echo 1 > rom

cat rom > /usr/share/kvm/<GPURomFileName>.bin

echo 0 > rom

Alternative Methods to Dump ROM File:

Step 4a2: Copy the ROM file (if you downloaded it) to the /usr/share/kvm/ directory.

You can use SFTP for this, or directly through Windows’ Command Prompt:

scp /path/to/<romfilename>.rom myusername@proxmoxserveraddress:/usr/share/kvm/<romfilename>.rom

Step 4a3: Add the ROM file to your VM Config (EXAMPLE):

hostpci0: 01:00,pcie=1,romfile=<GTX1050ti>.rom

NVIDIA USERS: If you’re still experiencing issues, or the ROM file is causing issues on its own, you might need to patch the ROM file (particularly for NVIDIA cards). There’s a great tool for patching GTX 10XX series cards here: https://github.com/sk1080/nvidia-kvm-patcher and here https://github.com/Matoking/NVIDIA-vBIOS-VFIO-Patcher. It only works for 10XX series though. If you have something older, you’ll have to patch the ROM file manually using a hex editor, which is beyond the scope of this tutorial guide.

Example of the Hardware Tab/Window, Before Windows 10 Installation.

Step 5: START THE VM!

We’re almost at the home stretch! Once you start your VM, open your noVNC / Shell Tab/Window (under the VM Tab), and you should see the Windows installer booting up. Let’s quickly go through the process, since it can be easy to mess things up at this junction.

Final Setup: Installing / Configuring Windows 10

Copyright(c) Jon Spraggins (https://jonspraggins.com)

If you followed the guide so far and are using VirtIO SCSI, you’ll run into an issue during the Windows 10 installation, when it tries to find your hard drive. Don’t worry!

Step 1: VirtIO Driver Installation

Simply go to your VM’s Hardware Tab/Window (again), double click the CD-ROM drive file (it should currently have the Windows 10 ISO loaded), and switch the ISO image to the VirtIO ISO file.

Tabbing back to your noVNC Shell window, click Browse, find your newly loaded VirtIO CD-ROM drive, and go to the vioscsi > w10 > amd64 sub-directory. Click OK.

Now the Windows installer should do its thing and load the Red Hat VirtIO SCSI driver for your hard drive. Before you start installing to the drive, go back again to the VirtIO CD-Rom, and also install your Network Adapter VirtIO drivers from NetKVM > w10 > amd64 sub-directory.

IMPORTANT #1: Don’t forget to switch back the ISO file from the VirtIO ISO image to your Windows installer ISO image under the VM Hardware > CD-Rom.

When you’re done changing the CD-ROM drive back to your Windows installer ISO, go back to your Shell window and click Refresh. The installer should then have your VM’s hard disk appear and have windows ready to be installed. Finish your Windows installation.

IMPORTANT #2: When Windows asks you to restart, right click your VM and hit ‘Stop’. Then go to your VM’s Hardware Tab/Window, and Unmount the Windows ISO from your CD-Rom drive. Now ‘Start’ your VM again.

Step 2: Enable Windows Remote Desktop

If all went well, you should now be seeing your Windows 10 VM screen! It’s important for us to enable some sort of remote desktop access, since we will be disabling Proxmox’s noVNC / Shell access to the VM shortly. I prefer to use Windows’ built-in Remote Desktop Client. Here’s a great, simple tutorial on enabling RDP access.

NOTE: While you’re in the Windows VM, make sure to make note of your VM’s Username, internal IP address and/or computer name.

Step 3: Disabling Proxmox noVNC / Shell Access

To make sure everything is properly configured before we get the GPU drivers installed, we want to disable the built-in video display adapter that shows up in the Windows VM. To do this, we simply go to the VM’s Hardware Tab/Window, and under the Display entry, we select None (none) from the drop-down list. Easy. Now ‘Stop’ and then ‘Start’ your Virtual Machine.

NOTE: If you are not able to (re)connect to your VM via Remote Desktop (using the given internal IP address or computer name / hostname), go back to the VM’s Hardware Tab/Window, and under the PCI Device Settings for your GPU, checkmark Primary GPU. Save it, then ‘Stop’ and ‘Start’ your VM again.

Step 4: Installing GPU Drivers

At long last, we are almost done. The final step is to get your GPU’s video card drivers installed. Since I’m using NVIDIA for this tutorial, we simply go to http://nvidia.com and browse for our specific GPU model’s driver (in this case, GTX 10XX series). While doing this, I like to check Windows’ Device Manager (under Control Panel) to see if there are any missing VirtIO drivers, and/or if the GPU is giving me a Code 43 Error. You’ll most likely see the Code 43 error on your GPU, which is why we are installing the drivers. If you’re missing any VirtIO (usually shows up as ‘PCI Device’ in Device Manager, with a yellow exclamation), just go back to your VM’s Hardware Tab/Window, repeat the steps to mount your VirtIO ISO file on the CD-Rom drive, then point the Device Manager in Windows to the CD-Rom drive when it asks you to add/update drivers for the Unknown device.

Sometimes just installing the plain NVIDIA drivers will throw an error (something about being unable to install the drivers). In this case, you’ll have to install using NVIDIA’s crappy GeForce Experience(tm) installer. It sucks because you have to create an account and all that, but your driver installation should work after that.

Congratulations!

After a reboot or two, you should now be able to see NVIDIA Control Panel installed in your Windows VM, as well as Device Manager showing no Code 43 Errors on your GPU(s). Pat yourself on the back, do some jumping jacks, order a cake! You’ve done it!

Multi-GPU Passthrough, it CAN be done!

Credits / Resources / Citations

- https://pve.proxmox.com/wiki/Pci_passthrough

- https://forum.proxmox.com/threads/gpu-passthrough-tutorial-reference.34303/

- https://vfio.blogspot.com/2014/08/iommu-groups-inside-and-out.html

- https://forum.proxmox.com/threads/nvidia-single-gpu-passthrough-with-ryzen.38798/

- https://heiko-sieger.info/iommu-groups-what-you-need-to-consider/

- https://heiko-sieger.info/running-windows-10-on-linux-using-kvm-with-vga-passthrough/

- http://vfio.blogspot.com/2014/08/vfiovga-faq.html

- https://passthroughpo.st/explaining-csm-efifboff-setting-boot-gpu-manually/

- http://bart.vanhauwaert.org/hints/installing-win10-on-KVM.html

- https://jonspraggins.com/the-idiot-installs-windows-10-on-proxmox/

- https://pve.proxmox.com/wiki/Windows_10_guest_best_practices

- https://docs.fedoraproject.org/en-US/quick-docs/creating-windows-virtual-machines-using-virtio-drivers/index.html

- https://nvidia.custhelp.com/app/answers/detail/a_id/4188/~/extracting-the-geforce-video-bios-rom-file

- https://www.overclock.net/forum/69-nvidia/1523391-easy-nvflash-guide-pictures-gtx-970-980-a.html

- https://medium.com/@konpat/kvm-gpu-pass-through-finding-the-right-bios-for-your-nvidia-pascal-gpu-dd97084b0313

- https://www.groovypost.com/howto/setup-use-remote-desktop-windows-10/

Thank you everyone!

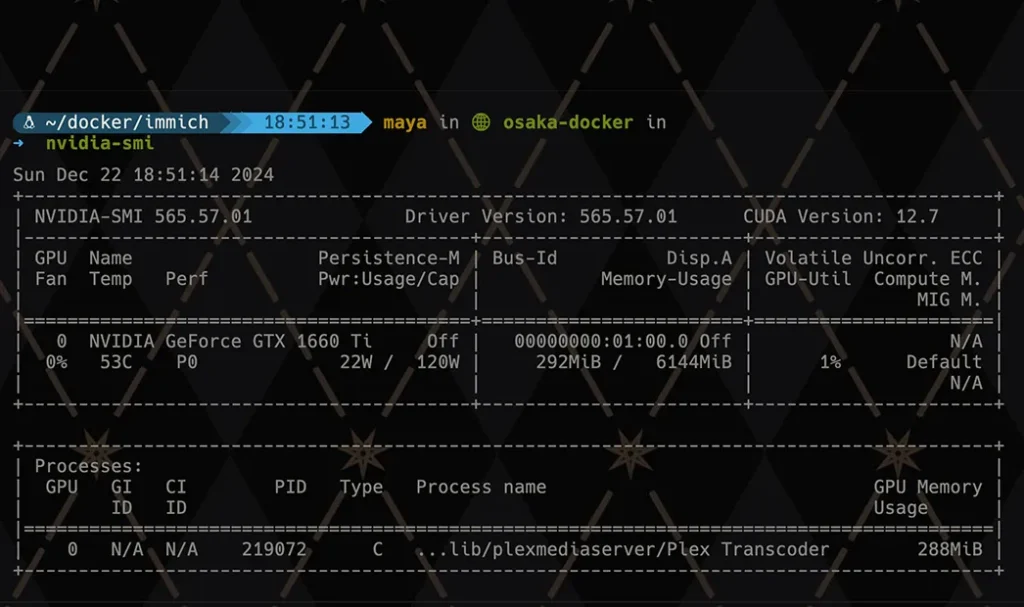

# Search apt for NVIDIA drivers. At the time of writing the latest driver is 565.

sudo apt search nvidia-driver

# Install the headless server drivers and NVIDIA utilities.

sudo apt install nvidia-headless-565-server libnvidia-encode-565-server nvidia-utils-565-server -y

After a reboot (because NVIDIA drivers always require a reboot—it’s tradition), I ran:

➜ nvidia-smi

Sun Dec 22 18:27:55 2024
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 565.57.01              Driver Version: 565.57.01      CUDA Version: 12.7     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA GeForce GTX 1660 Ti     Off |   00000000:01:00.0 Off |                  N/A |
|  0%   41C    P8              5W /  120W |       3MiB /   6144MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|  No running processes found                                                             |
+-----------------------------------------------------------------------------------------+

And there it was, the beautiful sight of a working NVIDIA GeForce GTX 1660 Ti, happily reporting temps, power usage, and memory stats. No errors, no crashes, no smoke coming out of the case—just pure, GPU-powered bliss.

But wait, Plex still needed Docker integration.

Enabling GPU Passthrough in Docker

For NVIDIA drivers to work in Docker, I needed the NVIDIA Container Toolkit. Installing it involved a few more commands:

# Add the GPG key and repository

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \
  && curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \
    sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \
    sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

# Update and install NVIDIA Container Toolkit

sudo apt-get update

sudo apt-get install -y nvidia-container-toolkit

# Configure NVIDIA Container Toolkit

sudo nvidia-ctk runtime configure --runtime=docker

sudo systemctl restart docker

# Test GPU integration within Docker

docker run --gpus all nvidia/cuda:11.5.2-base-ubuntu20.04 nvidia-smi

I finally saw the same GPU stats from within the Docker container. Success.
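If that test ever fails, one thing I would check first is whether the runtime got registered at all. The sketch below rests on an assumption: as far as I know, the nvidia-ctk configure step adds an "nvidia" entry under "runtimes" in /etc/docker/daemon.json. has_nvidia_runtime is a hypothetical helper, and the plain grep is deliberately crude rather than a real JSON parse.

```shell
#!/usr/bin/env bash
# has_nvidia_runtime [DAEMON_JSON]
# Succeeds if the Docker daemon config mentions an "nvidia" entry.
# DAEMON_JSON defaults to the usual location on Ubuntu.
has_nvidia_runtime() {
    local file="${1:-/etc/docker/daemon.json}"
    [ -r "$file" ] && grep -q '"nvidia"' "$file"
}

# Example:
#   has_nvidia_runtime || echo "nvidia runtime not configured for Docker"
```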

Now, it was time to set up the Docker Compose file for Plex.

Docker Compose Configuration for Plex

Here’s the important part: the linuxserver.io Plex image is a must-have for GPU passthrough. The official Plex image doesn’t play well with NVIDIA hardware encoding.

In my docker-compose.yml, I added the following:

services:
  plex:
    image: lscr.io/linuxserver/plex:latest
    ...
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: 1
              capabilities: [gpu]
    ports:
      ...
    environment:
      ...
      - VERSION=docker
      - NVIDIA_VISIBLE_DEVICES=all
      - NVIDIA_DRIVER_CAPABILITIES=compute,video,utility
    hostname: ...
    volumes:
      ...

After that, I ran:

docker compose down && docker compose up --force-recreate --build -d

The moment of truth came when I fired up Plex, picked a high-bitrate ISO file (because why not stress-test it immediately), and watched as Tautulli reported hardware transcoding in action. A quick glance at nvidia-smi also showed Plex happily munching on GPU resources.
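For a check that does not depend on Tautulli, the encoder session counter from nvidia-smi can be polled while a stream is playing. A small sketch, assuming the encoder.stats.sessionCount query field is available in your driver version; nvenc_active is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# nvenc_active
# Reads the output of
#   nvidia-smi --query-gpu=encoder.stats.sessionCount --format=csv,noheader
# on stdin (one number per GPU) and succeeds if any GPU reports at least
# one active NVENC session.
nvenc_active() {
    awk '$1 + 0 > 0 { found = 1 } END { exit !found }'
}

# Example: run while Plex is transcoding.
#   nvidia-smi --query-gpu=encoder.stats.sessionCount --format=csv,noheader | nvenc_active \
#     && echo "hardware encoding in use"
```

A count of zero during playback usually means Plex is direct-playing the file or has quietly fallen back to CPU transcoding.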

NVIDIA GPU Driver Patch

By default, NVIDIA’s drivers restrict the maximum number of simultaneous NVENC encoding sessions on consumer-grade GPUs. This means Plex can only serve a handful of concurrent hardware-transcoded streams. Applying this patch will fix that.

And That’s a Wrap!

After days of tweaking, migrating, and occasionally muttering curses at error messages, the server is finally up and running. CPU temps are low, load averages are stable, and the RAM usage sits comfortably around 75–80% (I’ve reserved 128GB for Docker-Server and 64GB for Client-Docker-Server).

The GPU, despite being a workhorse for Plex, rarely climbs above 60°C. Everything feels stable, snappy, and ready to handle whatever I throw at it next.

Sure, there were a few hiccups along the way (looking at you, disk mappings), but overall? I’d say this migration was a success. Now, onto the next adventure—probably adding even more containers. Because I can, and also, why not?
